Uncertainty-aware Prototype Learning with Variational Inference for Few-shot Point Cloud Segmentation

Abstract

Few-shot 3D semantic segmentation aims to generate accurate semantic masks for query point clouds with only a few annotated support examples. Existing prototype-based methods typically construct compact and deterministic prototypes from the support set to guide query segmentation. However, such rigid representations are unable to capture the intrinsic uncertainty introduced by scarce supervision, which often results in degraded robustness and limited generalization.

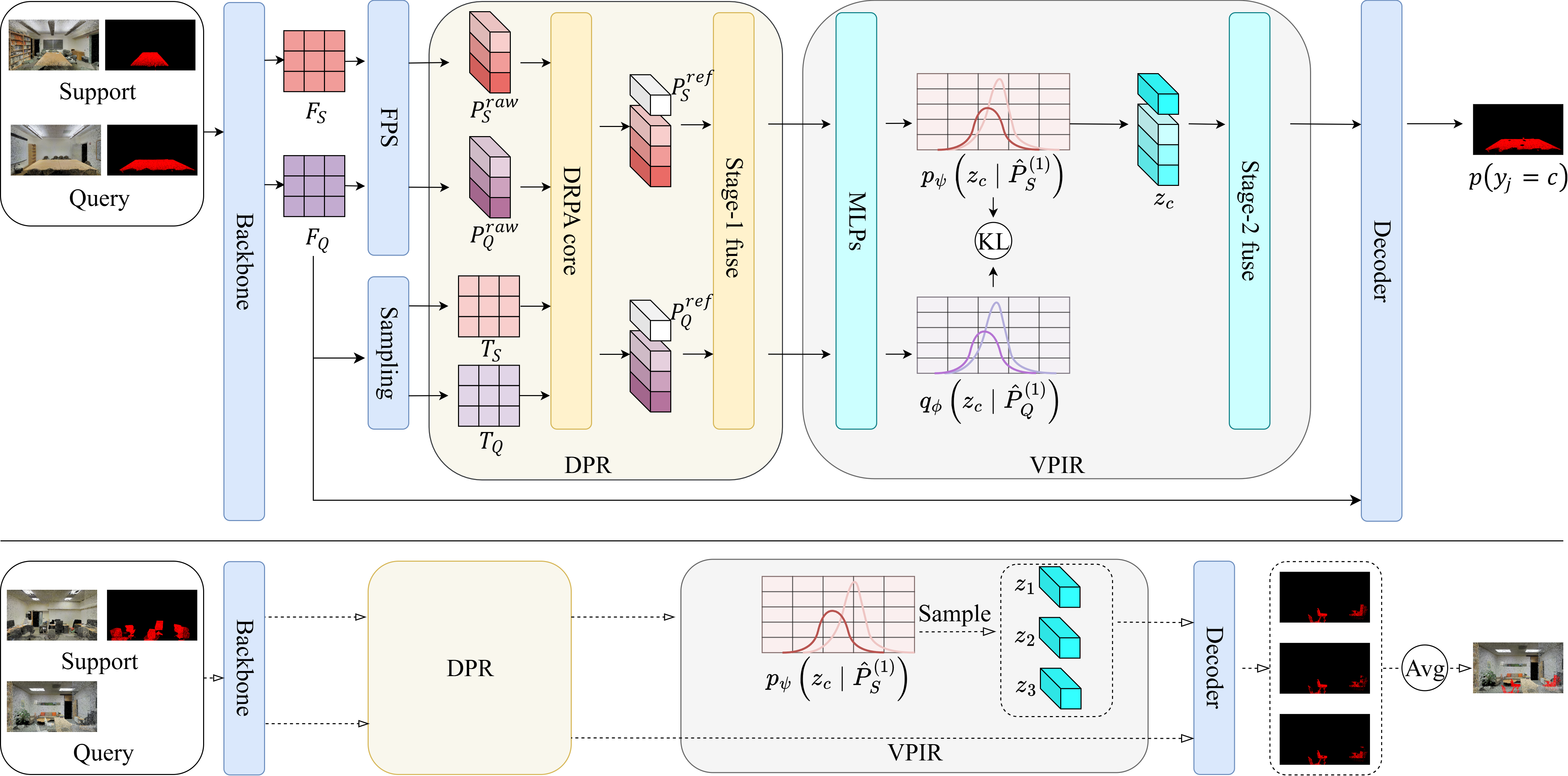

In this work, we propose UPL (Uncertainty-aware Prototype Learning), a probabilistic approach that incorporates uncertainty modeling into prototype learning for few-shot 3D segmentation. The framework has two key components. First, a dual-stream prototype refinement module enriches prototype representations by jointly leveraging the limited information available in both support and query samples. Second, we formulate prototype learning as a variational inference problem in which class prototypes are treated as latent variables. This probabilistic formulation enables explicit uncertainty modeling and yields robust, interpretable mask predictions.

Extensive experiments on the widely used ScanNet and S3DIS benchmarks show that UPL achieves consistent state-of-the-art performance under different settings while providing reliable uncertainty estimation.

Method

Our UPL framework consists of two key components: (i) a Dual-stream Prototype Refinement (DPR) module that enhances prototype discriminability by leveraging mutual information between support and query sets, and (ii) a Variational Prototype Inference Regularization (VPIR) module that models class prototypes as latent variables to capture uncertainty and enable probabilistic inference.
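As a rough illustration of what treating a class prototype as a latent variable can look like, the sketch below builds a diagonal-Gaussian prototype from support features by masked average pooling, draws one sample with the reparameterization trick, and evaluates the closed-form KL divergence to a standard-normal prior. This is a generic variational-inference pattern rather than the VPIR module itself: the function names, the use of the masked per-dimension feature variance as the posterior variance, and the standard-normal prior are all assumptions made for illustration.

```python
import numpy as np

def gaussian_prototype(features, mask, eps=1e-6):
    """Masked mean and log-variance of support features (hypothetical helper).

    features: (N, D) per-point features; mask: (N,) binary foreground mask.
    """
    w = mask.astype(np.float64)
    total = w.sum() + eps
    mu = (features * w[:, None]).sum(axis=0) / total
    var = (((features - mu) ** 2) * w[:, None]).sum(axis=0) / total
    return mu, np.log(var + eps)

def sample_prototype(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * noise."""
    noise = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * noise

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var)

# Toy support set: 128 points with 16-dimensional features (assumed sizes).
rng = np.random.default_rng(0)
support_features = rng.standard_normal((128, 16))
support_mask = (rng.random(128) > 0.5).astype(np.int64)

mu, log_var = gaussian_prototype(support_features, support_mask)
z = sample_prototype(mu, log_var, rng)      # one sampled prototype
kl = kl_to_standard_normal(mu, log_var)     # regularization term
```

In a training loop of this kind, the sampled prototype `z` would replace the deterministic mean when scoring query points, and `kl` would be added to the segmentation loss as a regularizer.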

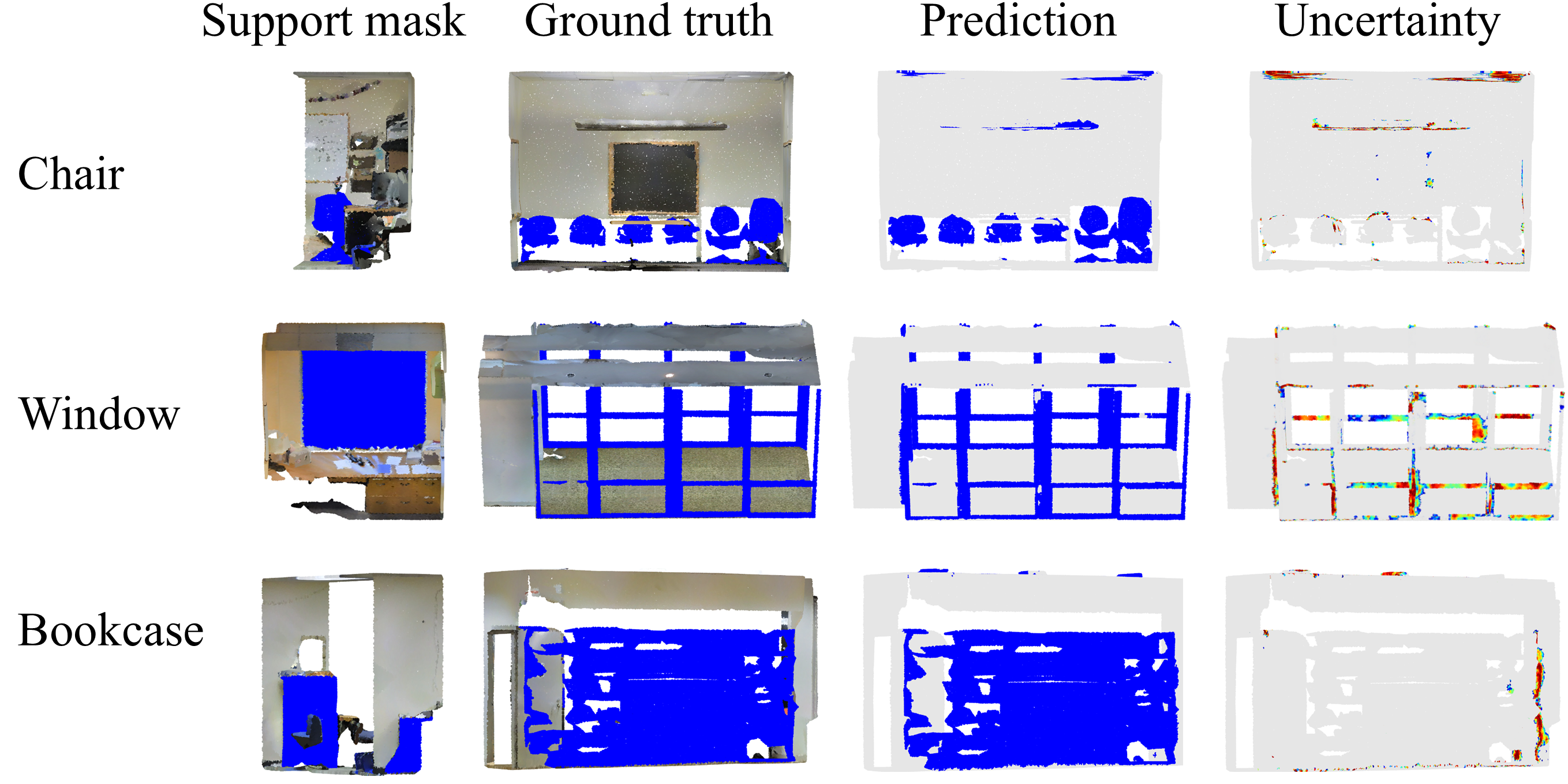

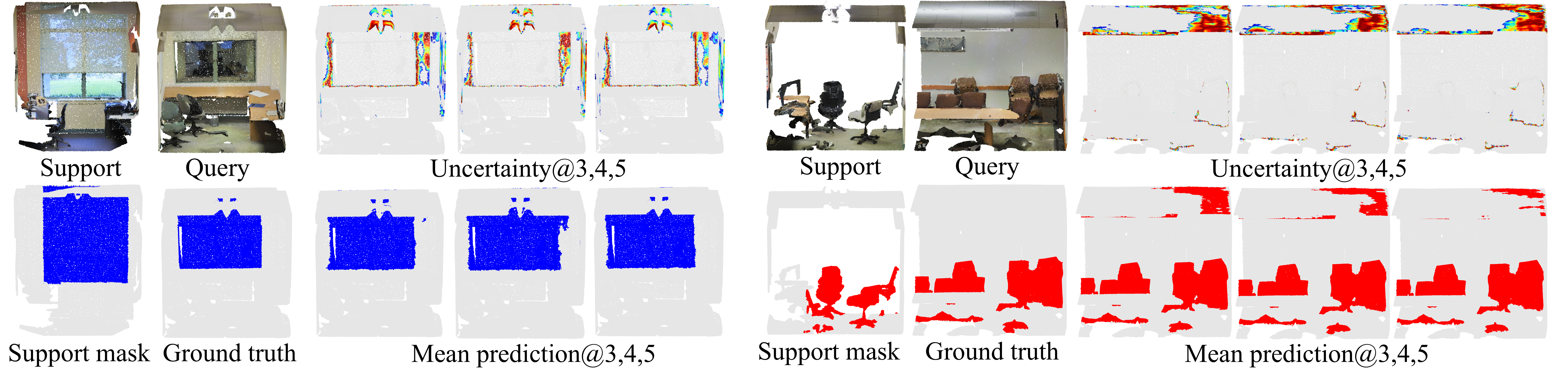

Qualitative Results

UPL produces cleaner object boundaries and provides uncertainty maps that highlight regions of occlusion and label ambiguity. The visualization shows that our method achieves strong few-shot generalization with high-quality segmentation results.

Experimental Results

We evaluate UPL on two widely used benchmarks, S3DIS and ScanNet, under 1-way and 2-way settings with 1 and 5 support shots. Our method consistently achieves state-of-the-art performance while providing reliable uncertainty estimation.

Main Results on S3DIS and ScanNet

Values are mIoU; Mean is the average of the S0 and S1 splits.

| Dataset | Method | 1-way 1-shot S0 | 1-way 1-shot S1 | 1-way 1-shot Mean | 1-way 5-shot S0 | 1-way 5-shot S1 | 1-way 5-shot Mean | 2-way 1-shot S0 | 2-way 1-shot S1 | 2-way 1-shot Mean | 2-way 5-shot S0 | 2-way 5-shot S1 | 2-way 5-shot Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S3DIS | AttMPTI | 36.32 | 38.36 | 37.34 | 46.71 | 42.70 | 44.71 | 31.09 | 29.62 | 30.36 | 39.53 | 32.62 | 36.08 |
| S3DIS | QGE | 41.69 | 39.09 | 40.39 | 50.59 | 46.41 | 48.50 | 33.45 | 30.95 | 32.20 | 40.53 | 36.13 | 38.33 |
| S3DIS | QPGA | 35.50 | 35.83 | 35.67 | 38.07 | 39.70 | 38.89 | 25.52 | 26.26 | 25.89 | 30.22 | 32.41 | 31.32 |
| S3DIS | CoSeg | 46.31 | 48.10 | 47.21 | 51.40 | 48.68 | 50.04 | 37.44 | 36.45 | 36.95 | 42.27 | 38.45 | 40.36 |
| S3DIS | UPL (Ours) | 48.18 | 49.02 | 48.60 | 55.92 | 48.53 | 52.22 | 38.13 | 37.44 | 37.79 | 41.78 | 41.96 | 41.87 |
| ScanNet | AttMPTI | 34.03 | 30.97 | 32.50 | 39.09 | 37.15 | 38.12 | 25.99 | 23.88 | 24.94 | 30.41 | 27.35 | 28.88 |
| ScanNet | QGE | 37.38 | 33.02 | 35.20 | 45.08 | 41.89 | 43.49 | 26.85 | 25.17 | 26.01 | 28.35 | 31.49 | 29.92 |
| ScanNet | QPGA | 34.57 | 33.37 | 33.97 | 41.22 | 38.65 | 39.94 | 21.86 | 21.47 | 21.67 | 30.67 | 27.69 | 29.18 |
| ScanNet | CoSeg | 41.73 | 41.82 | 41.78 | 48.31 | 44.11 | 46.21 | 28.72 | 28.83 | 28.78 | 35.97 | 33.39 | 34.68 |
| ScanNet | UPL (Ours) | 43.13 | 42.87 | 43.00 | 48.48 | 45.18 | 46.83 | 32.09 | 32.68 | 32.39 | 39.65 | 37.15 | 38.40 |

Key Findings

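- Consistent SOTA Performance: UPL achieves the best mean mIoU in every setting on both datasets. CoSeg is marginally ahead on only two individual S3DIS splits (1-way 5-shot S1 and 2-way 5-shot S0).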

- Larger Gains in Complex Settings: The largest improvements occur in the 2-way settings on ScanNet (+3.61 mIoU at 1-shot and +3.72 mIoU at 5-shot over CoSeg).

- Uncertainty Estimation: Provides reliable uncertainty maps that correlate with prediction errors.

- Robustness: Benefits more from additional support examples, indicating better adaptation to intra-class variation.
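The margins quoted in the list can be reproduced from the Mean columns of the results table. The short check below recomputes UPL's lead over CoSeg, the strongest baseline in the table, for every setting. The dictionary layout and variable names are illustrative only; the numbers are copied from the table.

```python
# Mean mIoU per setting, copied from the results table.
settings = ["1-way 1-shot", "1-way 5-shot", "2-way 1-shot", "2-way 5-shot"]
mean_miou = {
    "S3DIS": {"CoSeg": [47.21, 50.04, 36.95, 40.36],
              "UPL":   [48.60, 52.22, 37.79, 41.87]},
    "ScanNet": {"CoSeg": [41.78, 46.21, 28.78, 34.68],
                "UPL":   [43.00, 46.83, 32.39, 38.40]},
}

# Per-setting margin of UPL over CoSeg, rounded to two decimals.
gains = {
    dataset: [round(u - c, 2) for u, c in zip(rows["UPL"], rows["CoSeg"])]
    for dataset, rows in mean_miou.items()
}

for dataset, deltas in gains.items():
    for setting, delta in zip(settings, deltas):
        print(f"{dataset:8s} {setting}: +{delta:.2f} mIoU")
```

The two largest margins are the ScanNet 2-way settings (+3.61 and +3.72), which are the figures cited in the list; the S3DIS margins are smaller in every setting.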

Uncertainty Analysis

Our framework provides uncertainty estimates that correlate with prediction errors, which makes the model's behavior easier to interpret. The uncertainty maps highlight regions of occlusion and label ambiguity, where the model is less confident in its predictions.
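One common way to turn a distribution over prototypes into a per-point uncertainty map is to sample several prototypes, average the resulting class probabilities, and take the entropy of that average. The sketch below does this with cosine-similarity logits for a background/foreground pair. The sampling scheme, the temperature, the fixed posterior standard deviation, and all function names are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cosine_logits(query, prototypes, temperature=10.0):
    """query: (N, D), prototypes: (C, D) -> (N, C) scaled cosine similarities."""
    q = query / (np.linalg.norm(query, axis=1, keepdims=True) + 1e-8)
    p = prototypes / (np.linalg.norm(prototypes, axis=1, keepdims=True) + 1e-8)
    return temperature * (q @ p.T)

def predictive_entropy(query, proto_mu, proto_std, n_samples=20, seed=0):
    """Monte Carlo estimate of per-point predictive entropy (in nats)."""
    rng = np.random.default_rng(seed)
    probs = np.zeros((query.shape[0], proto_mu.shape[0]))
    for _ in range(n_samples):
        # Draw one prototype per class from the assumed Gaussian posterior.
        sampled = proto_mu + proto_std * rng.standard_normal(proto_mu.shape)
        probs += softmax(cosine_logits(query, sampled))
    probs /= n_samples
    entropy = -(probs * np.log(probs + 1e-12)).sum(axis=1)
    return probs, entropy

# Toy query cloud: 256 points with 16-dimensional features (assumed sizes).
rng = np.random.default_rng(1)
query = rng.standard_normal((256, 16))
proto_mu = rng.standard_normal((2, 16))      # background / foreground means
proto_std = 0.1 * np.ones_like(proto_mu)     # hypothetical posterior std

probs, entropy = predictive_entropy(query, proto_mu, proto_std)
pred = probs.argmax(axis=1)                  # predicted label per point
```

Points whose entropy approaches log 2 are those where the sampled prototypes disagree or the point sits near the decision boundary. Comparing `entropy` against a per-point error mask is one way to check how well uncertainty tracks prediction errors.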

BibTeX

@article{zhao2026upl,
  title={Uncertainty-aware Prototype Learning with Variational Inference for Few-shot Point Cloud Segmentation},
  author={Zhao, Yifei and Zhao, Fanyu and Li, Yinsheng},
  journal={Under Review},
  year={2026},
  url={https://fdueblab-UPL.github.io/}
}

IEEE Copyright Notice:

© 2026 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

This work has been submitted to ICASSP 2026 and is currently under review. Upon publication, the paper's Digital Object Identifier (DOI) will be added to this repository.